Discover why understanding AI models is essential for reliable outcomes and for fostering trust between humans and machines.

Are you using Artificial Intelligence (AI) solutions to solve business problems in your industry? From customer interactions through chatbots to diagnosing diseases in patients, AI is rapidly transforming how organizations operate. But building these predictive models is only part of the challenge; the other part is convincing organizations and individuals that the Machine Learning (ML) models, and the results they produce, are trustworthy and responsible.

For example, suppose a healthcare ML model analyzes a patient's data, detects a tumor, and suggests a treatment. How can doctors trust that output enough to begin treatment? How do you explain the logic behind the AI's decision to the patient? How do you build trust and reliability into your AI solutions?

According to IBM's Global AI Adoption Index 2022, the vast majority of IT professionals deploying AI (93%) agree that being able to explain how their AI arrived at a decision is important to their company.

In this article, we discuss how to create explainable AI models by understanding the logic behind your AI solutions, so that your customers and stakeholders can trust your AI outcomes.

Explainable Artificial Intelligence (XAI)

Some AI models are interpretable by design: their working logic can be understood at any stage of the program. Examples include linear regression models, decision trees, and semantic models.
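Linear regression, one of the examples above, shows why such models are easy to understand: once fitted, its intercept and slope are the model's entire logic, so every prediction can be read off and broken into parts. The minimal Python sketch below fits a one-feature linear regression by ordinary least squares; the function names and the toy data are hypothetical, chosen only for illustration.

```python
from statistics import mean


def fit_simple_linear_regression(xs, ys):
    """Fit y ≈ intercept + slope * x by ordinary least squares (one feature)."""
    x_bar, y_bar = mean(xs), mean(ys)
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel out.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


def explain_prediction(intercept, slope, x):
    """Split a prediction into the baseline and the feature's contribution."""
    contribution = slope * x
    return {
        "baseline": intercept,
        "feature_contribution": contribution,
        "prediction": intercept + contribution,
    }


if __name__ == "__main__":
    # Hypothetical toy data, e.g. advertising spend (x) vs. sales (y).
    xs = [1.0, 2.0, 3.0, 4.0]
    ys = [3.0, 5.0, 7.0, 9.0]
    intercept, slope = fit_simple_linear_regression(xs, ys)
    print(f"sales = {intercept:.2f} + {slope:.2f} * spend")  # sales = 1.00 + 2.00 * spend
    print(explain_prediction(intercept, slope, 5.0))
```

A decision tree offers the same kind of transparency in a different form: each prediction corresponds to a readable path of if/then rules.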

Many of today's predictive models, however, find patterns or solutions based solely on the data they are given, without human intervention. Complex models whose logic humans cannot readily describe are called “black box” models: the relationship between their inputs and outputs is hard to explain.

Hence, explainability is an essential component of AI/ML models. Explainability techniques can make black box models more transparent and understandable to all stakeholders.
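One widely used model-agnostic technique is permutation feature importance: shuffle one input feature at a time and measure how much the model's error grows. Because it only calls the model's prediction function, it works even when the model itself is a black box. The plain-Python sketch below is illustrative; `black_box` is a hypothetical stand-in for an opaque model and the data are synthetic. scikit-learn provides a maintained implementation as `sklearn.inspection.permutation_importance`.

```python
import random


def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Average increase in MSE when each feature column is shuffled.

    `predict` is treated as a black box: only its outputs are used.
    """
    rng = random.Random(seed)
    baseline = mean_squared_error(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and y
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            score = mean_squared_error(y, [predict(row) for row in X_perm])
            increases.append(score - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


def black_box(row):
    # Hypothetical opaque model: depends strongly on feature 0,
    # weakly on feature 1, and ignores feature 2 entirely.
    return 3.0 * row[0] + 0.5 * row[1]


if __name__ == "__main__":
    data_rng = random.Random(42)
    X = [[data_rng.uniform(0, 1) for _ in range(3)] for _ in range(200)]
    y = [black_box(row) for row in X]
    for j, score in enumerate(permutation_importance(black_box, X, y)):
        print(f"feature {j}: importance {score:.3f}")
```

Feature 2 receives an importance of exactly zero because the model never reads it. In a real evaluation, you would compute the scores on held-out data using the model's actual loss function.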

In PwC's 2022 AI Business Survey, around 52% of leaders said they will focus on developing and deploying AI models that are interpretable and explainable.

The term explainable AI (XAI) was popularized by DARPA through its XAI program. XAI is a set of techniques and practices for explaining a model's functionality and behavior at any stage of its life cycle. Applying it helps ensure that models are properly tested and helps address AI risks such as bias, inaccuracy, and discrimination.

Business Value of XAI

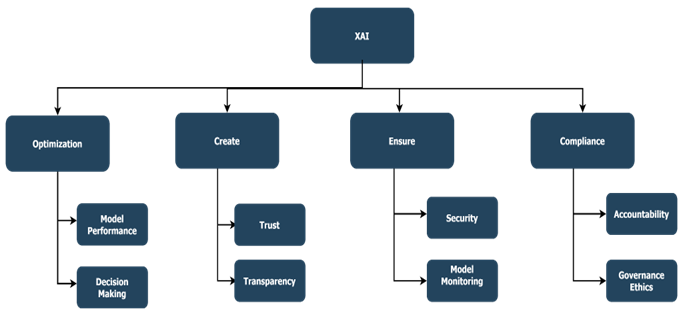

XAI is a field of AI that provides more comprehensive and understandable explanations of an AI system's decision-making process. XAI is becoming increasingly important as AI systems grow more complex and are used to make decisions that significantly affect business operations and customer experiences. XAI models can help accelerate your business in the following ways:

- Accountability: XAI models provide insight into the rationale behind decisions and the decision-making process, helping businesses quickly identify mistakes and areas for improvement. These insights encourage decision-makers to take responsibility for their actions, which can lead to better choices and more effective operations.

- Compliance: XAI models help with compliance by making data and decisions more transparent, clarifying how decisions were made, and providing audit trails. They can also help check for bias and discrimination before deployment, and help ensure data privacy and security by embedding ethical principles (a moral compass) into model training.

- Better business strategies: By explaining how their machine learning models reach decisions, businesses can uncover insights that would otherwise stay hidden from human analysis and build better-informed strategies. For example, a customer feedback ML model can help analyze consumer behavior toward products and guide businesses toward appropriate decisions.

- Decision-making: XAI models provide transparency into the decision-making process, allowing businesses to make more informed decisions and adjust strategies more quickly. Explanations can also help teams spot trends and patterns in data, identifying opportunities and risks before they become too costly to handle.

Figure 2. The dimensions in which XAI can add value to a business.
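The compliance point above mentions checking for bias and discrimination before deployment. One simple check compares how often a model makes a positive prediction for each group, known as the demographic parity difference. The sketch below is illustrative only: the function names, group labels, and the 0.1 tolerance are hypothetical, and choosing a fairness metric and threshold is a policy decision. Libraries such as Fairlearn offer maintained implementations of this and related metrics.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical loan-approval predictions (1 = approve) for two groups.
    preds = [1, 1, 0, 0, 1, 0, 0, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    gap = demographic_parity_difference(preds, groups)
    TOLERANCE = 0.1  # hypothetical policy threshold
    print(selection_rates(preds, groups))  # {'A': 0.5, 'B': 0.25}
    print("flag for review" if gap > TOLERANCE else "within tolerance")
```

A gap of 0.25 exceeds the illustrative tolerance, so this model would be flagged for human review before release.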

XAI: From Theory to Practice

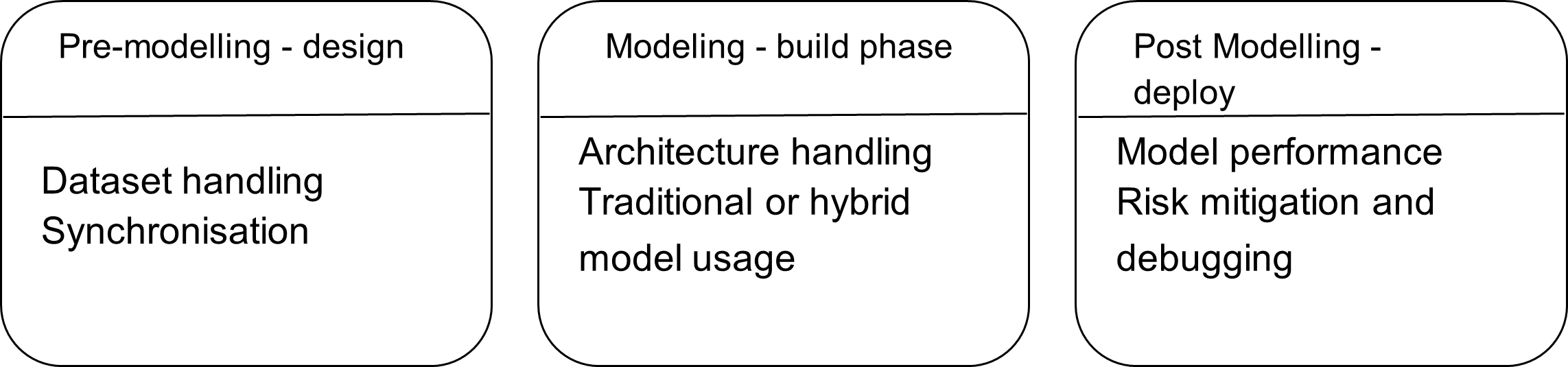

Organizations must invest in the right tools to ensure the explainability of their models. It is critical to plan for explainability alongside model design and to integrate it into every stage of the ML life cycle. To ensure transparency and reduce risks, business managers should understand the limitations of unexplained models and set XAI objectives accordingly.

A holistic strategy that covers the needs of developers, consumers, and businesses is essential, as are proper XAI metrics with checkpoints for human accountability and intervention. Organizations must also maintain proper documentation for all their models to ensure legal and ethical compliance.
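Checkpoints for human intervention and model documentation can be combined in code. The sketch below assumes a hypothetical policy in which any prediction whose confidence falls below a threshold (0.8 here, an arbitrary illustrative value) is routed to a human reviewer, and every decision is appended to an audit log. The `AuditRecord` fields and the `checkpoint` function are illustrative names, not a standard schema.

```python
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditRecord:
    """One documented model decision (hypothetical schema)."""
    model_version: str
    input_id: str
    prediction: str
    confidence: float
    routed_to_human: bool
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def checkpoint(model_version, input_id, prediction, confidence, audit_log, threshold=0.8):
    """Route low-confidence predictions to a human and record every decision."""
    routed = confidence < threshold
    audit_log.append(AuditRecord(model_version, input_id, prediction, confidence, routed))
    return "human_review" if routed else "auto_approve"


if __name__ == "__main__":
    audit_log = []
    print(checkpoint("tumor-model-v1", "case-001", "malignant", 0.62, audit_log))  # human_review
    print(checkpoint("tumor-model-v1", "case-002", "benign", 0.97, audit_log))  # auto_approve
    for record in audit_log:
        print(asdict(record))
```

In practice the audit log would be written to durable storage, and the threshold would be set per use case by the people accountable for the model.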

Figure 1. The three stages of the ML life cycle at which explainability can be incorporated.

Well-defined XAI metrics and checkpoints, with human intervention when needed, increase transparency. They also make it easier to apply backward propagation methods that analyze a model by tracing its outputs back to its inputs, and they support better model performance, debugging, and root-cause analysis.
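The backward propagation methods mentioned above reuse the backward pass from training to ask how sensitive a model's output is to each input. One of the simplest is gradient × input, sketched below for a tiny one-hidden-layer ReLU network written out by hand. The weights are hypothetical; in practice a framework's automatic differentiation computes the gradient, and methods such as layer-wise relevance propagation or integrated gradients refine the same idea.

```python
def relu(z):
    return z if z > 0 else 0.0


def forward(x, W1, b1, w2, b2):
    """One hidden ReLU layer and a linear output; returns (y, pre-activations)."""
    h_pre = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(W1, b1)]
    h = [relu(z) for z in h_pre]
    y = sum(wk * hk for wk, hk in zip(w2, h)) + b2
    return y, h_pre


def input_gradient(x, W1, b1, w2, b2):
    """Backward pass: dy/dx_i, propagated from the output back to the inputs."""
    _, h_pre = forward(x, W1, b1, w2, b2)
    # dy/dh_pre_k = w2_k * relu'(h_pre_k); inactive units pass no gradient.
    delta = [wk if z > 0 else 0.0 for wk, z in zip(w2, h_pre)]
    # dy/dx_i = sum over hidden units k of delta_k * W1[k][i]
    return [sum(delta[k] * W1[k][i] for k in range(len(W1))) for i in range(len(x))]


def gradient_times_input(x, W1, b1, w2, b2):
    """Attribution for each input feature: x_i * dy/dx_i."""
    grad = input_gradient(x, W1, b1, w2, b2)
    return [xi * g for xi, g in zip(x, grad)]


if __name__ == "__main__":
    # Hypothetical weights for a 3-input, 2-hidden-unit network (zero biases).
    W1 = [[1.0, -2.0, 0.5], [0.5, 1.0, -1.0]]
    b1 = [0.0, 0.0]
    w2 = [2.0, -1.0]
    b2 = 0.0
    x = [1.0, 0.5, 2.0]
    y, _ = forward(x, W1, b1, w2, b2)
    attributions = gradient_times_input(x, W1, b1, w2, b2)
    print(y, attributions)  # 2.0 [2.0, -2.0, 2.0]
```

Because this network is piecewise linear with zero biases, the attributions sum exactly to the output (2.0 here). With nonzero biases that property no longer holds, which is one motivation for refinements such as integrated gradients.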

Future scope

Transparency is becoming increasingly important for businesses that want to remain competitive and maximize their ROI and profits. Explainable models will be instrumental in shaping the future of the AI industry. Once we understand a model's behavior, we can prevent wrong predictions from harming the business and provide more reliable AI to end users.

Models should not rely on any single explainability technique and must also be able to provide multimodal explanations, for example for text, images, and audio.

In addition to explaining their own models, businesses now use external SaaS providers. Understanding a model's objectives and viewing them in a broader context are essential steps in choosing the explainability strategy and model best suited to the business.

The U.S. National Institute of Standards and Technology (NIST) has included explainability as one of nine factors for measuring AI trust and fairness.

Conclusion

XAI is an active field of research, driven by regulatory challenges and consumer expectations. For humans to trust AI, models must be able to explain themselves. Organizations that are confident in their explainable models can increase trust between AI and consumers and promote their business.

Overall, explainable AI can give businesses a significant competitive advantage and help them better serve their customers.