With the increasing adoption of AI models, the success of an organization’s AI initiatives depends not only on developing powerful models but also on managing and deploying them effectively. Two critical practices have emerged for operationalizing these models: ModelOps and MLOps.

Model Ops is the broader discipline: it encompasses MLOps as well as the management, governance, and compliance of all AI models. MLOps focuses more narrowly on automating the model lifecycle and making it efficient.

In this blog post, let us demystify ModelOps and MLOps, explore their distinct roles in the AI lifecycle, and gain insights into how these practices drive innovation, scalability, and operational excellence.

MODEL OPS

Definition and Scope:

Model Ops can be defined as the set of activities focused on operationalizing and managing machine learning models. It establishes robust frameworks and procedures that ensure models are integrated seamlessly into production environments, with the aim of improving the accuracy, efficiency, and scalability of machine learning initiatives.

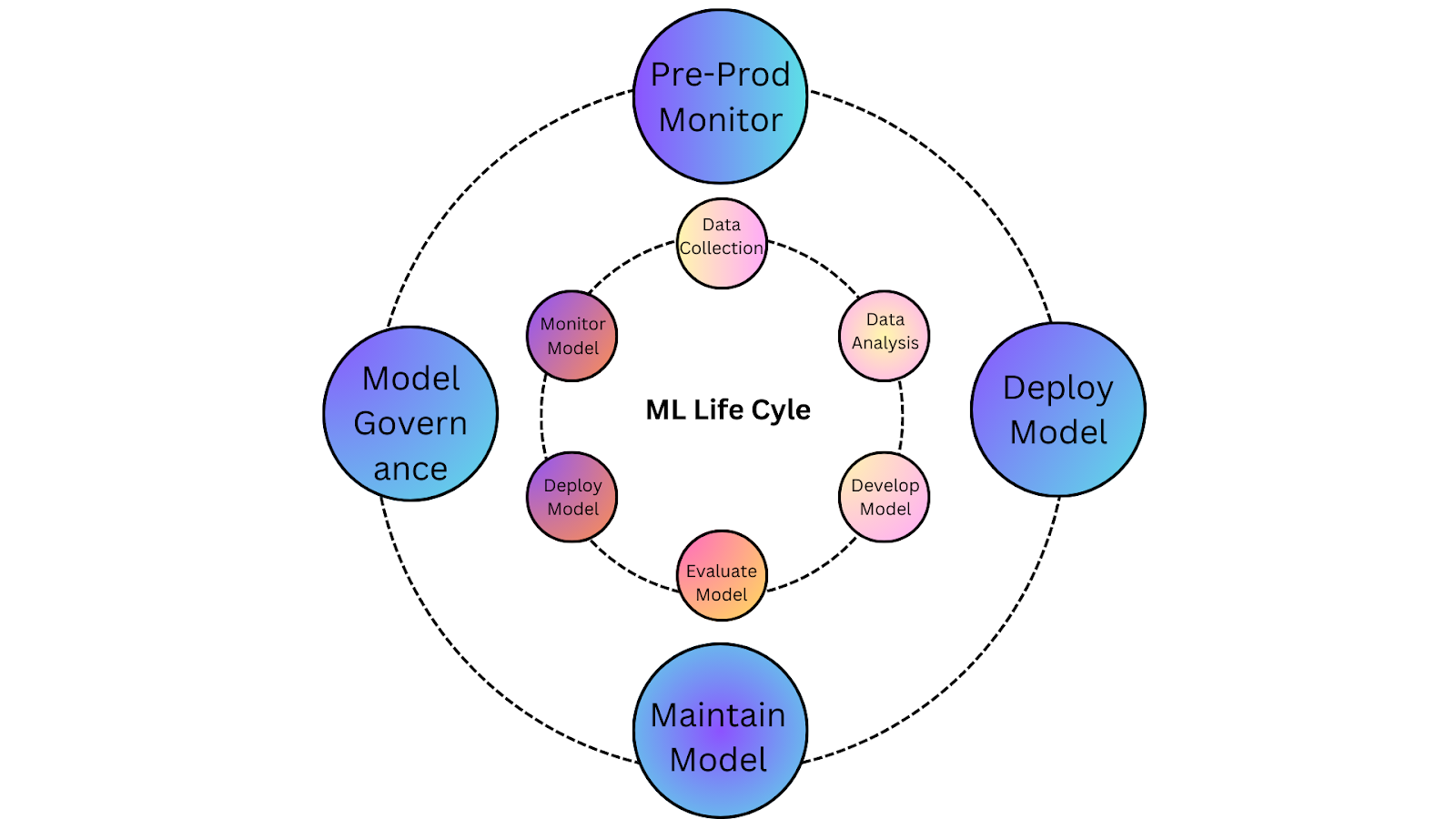

Figure 1. Model Ops life cycle

Model Development and Training:

One crucial aspect of Model Ops is facilitating efficient model development and training by working closely with data scientists and ML engineers to streamline the iterative process of creating and fine-tuning models.

Monitoring Model Performance:

Once deployed, models need to be closely monitored to assess their accuracy, detect anomalies, and identify potential performance degradation. Model Ops enables timely detection and mitigation of issues, ensuring that models consistently deliver reliable results.

Establishing a Feedback Loop:

Organizations can iteratively refine and enhance their machine-learning algorithms by collecting relevant data and feedback from the deployed models. This feedback loop ensures that models evolve and adapt to changing data patterns, ultimately improving their predictive capabilities.

Scalability and Flexibility in Model Deployment:

One of the significant advantages of implementing Model Ops is the ability to scale model deployment efficiently. It puts in place the infrastructure and processes needed to deploy models seamlessly across various production environments. This scalability allows businesses to leverage their models effectively across different departments, products, or even customer segments.

ML OPS

Definition and Scope:

ML Ops focuses on operationalizing ML models by bridging the gap between data scientists and operations teams. It encompasses the processes, tools, and practices required to facilitate seamless collaboration, automation, and reproducibility throughout the ML lifecycle.

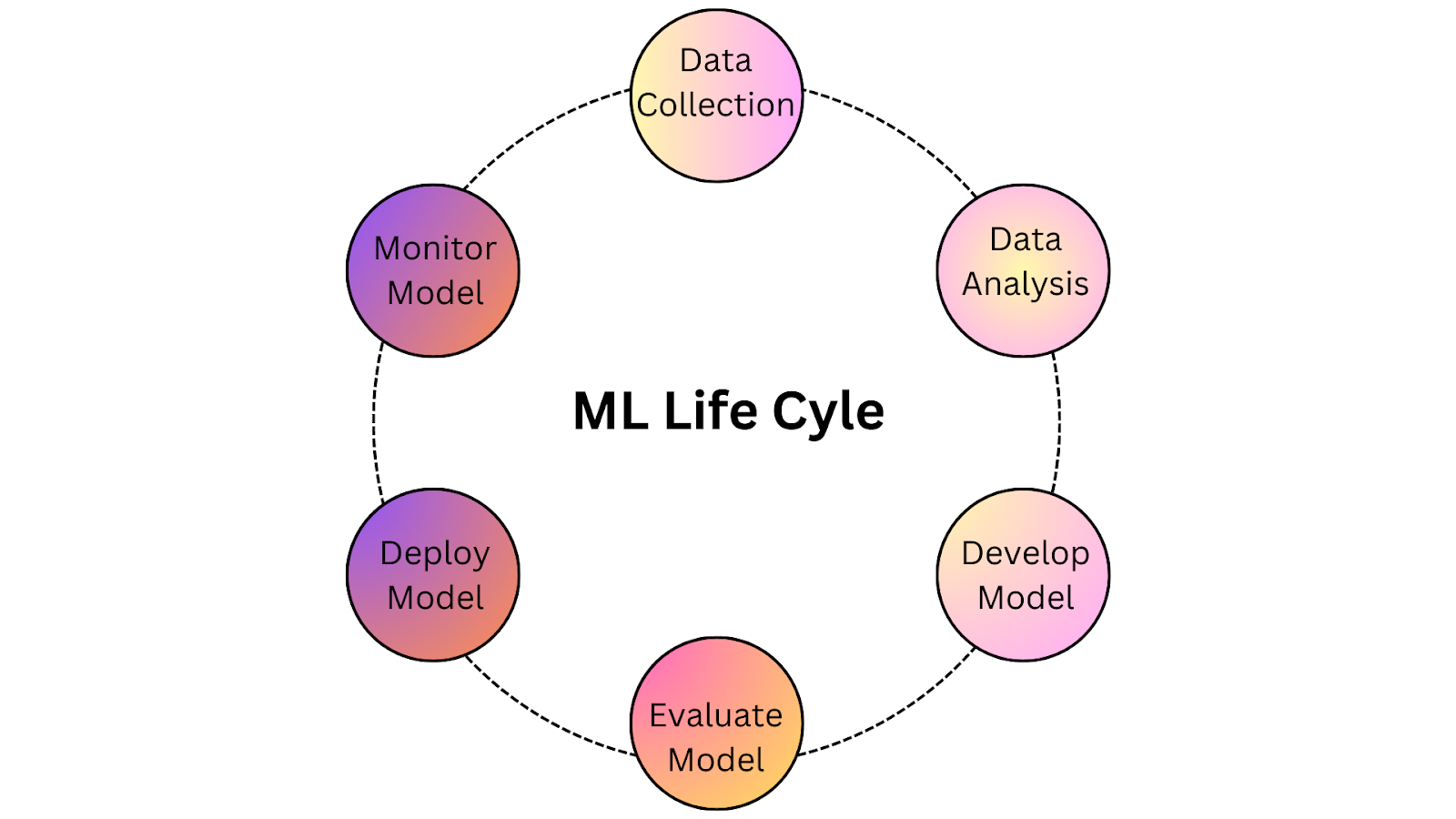

Figure 2. ML model life cycle

Model Development and Training:

ML Ops provides standardized workflows and version control mechanisms. It enables data scientists to experiment, iterate, and improve models more efficiently, ensuring reproducibility and reducing time to deployment.
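One way to picture the version control and reproducibility described above is to derive a version ID from everything that defines a training run. The sketch below is illustrative only: the function `model_version_id`, its parameters, and the sample values are hypothetical and do not come from any particular ML Ops tool.

```python
import hashlib
import json


def model_version_id(params: dict, data_fingerprint: str, code_revision: str) -> str:
    """Derive a deterministic version ID from the inputs that define a training run."""
    payload = json.dumps(
        {"params": params, "data": data_fingerprint, "code": code_revision},
        sort_keys=True,  # key order must not change the resulting hash
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


# Hypothetical runs: same settings in a different key order, then a changed hyperparameter.
run_a = model_version_id({"lr": 0.01, "depth": 6}, "sales-2023-q4", "abc123")
run_b = model_version_id({"depth": 6, "lr": 0.01}, "sales-2023-q4", "abc123")
run_c = model_version_id({"lr": 0.02, "depth": 6}, "sales-2023-q4", "abc123")

print(run_a == run_b)  # True: identical inputs give the same version
print(run_a == run_c)  # False: a changed hyperparameter gives a new version
```

Because the ID depends only on the hyperparameters, the data fingerprint, and the code revision, two team members who train with the same inputs arrive at the same version, which is the property reproducibility relies on.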

Monitoring Model Performance:

ML Ops establishes monitoring systems that continuously track metrics such as accuracy, latency, and resource utilization, enabling early detection of anomalies and informing iterative model improvements.
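As a minimal sketch of such a monitoring system, the example below tracks accuracy and latency over a rolling window of recent predictions and raises alerts when either crosses a threshold. The class `RollingMonitor`, its thresholds, and the sample data are assumptions made for illustration; a production setup would typically rely on a dedicated monitoring stack.

```python
from collections import deque
from statistics import mean


class RollingMonitor:
    """Tracks accuracy and latency over the most recent `window` predictions."""

    def __init__(self, window: int = 100, min_accuracy: float = 0.9,
                 max_latency_ms: float = 200.0):
        self.correct = deque(maxlen=window)      # 1.0 for a hit, 0.0 for a miss
        self.latency_ms = deque(maxlen=window)   # per-prediction latency
        self.min_accuracy = min_accuracy
        self.max_latency_ms = max_latency_ms

    def record(self, predicted, actual, latency_ms: float) -> None:
        self.correct.append(1.0 if predicted == actual else 0.0)
        self.latency_ms.append(latency_ms)

    def alerts(self) -> list[str]:
        """Return human-readable alerts; an empty list means the model looks healthy."""
        found = []
        if self.correct and mean(self.correct) < self.min_accuracy:
            found.append("accuracy below threshold")
        if self.latency_ms and mean(self.latency_ms) > self.max_latency_ms:
            found.append("latency above threshold")
        return found


# Hypothetical stream of (predicted, actual, latency in ms) observations.
monitor = RollingMonitor(window=4, min_accuracy=0.75, max_latency_ms=100.0)
for predicted, actual, latency in [(1, 1, 40.0), (0, 1, 55.0), (1, 0, 60.0), (1, 1, 45.0)]:
    monitor.record(predicted, actual, latency)

print(monitor.alerts())  # ['accuracy below threshold']
```

The fixed-size window keeps the check focused on recent behavior, so a model that degrades after deployment is flagged even if its lifetime average still looks acceptable.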

Feedback Loop and Model Iteration:

ML Ops facilitates a feedback loop between data scientists and operations teams. Insights from production flow back to the people building the models, who use them to refine the models, retrain them with new data, and continuously improve their accuracy and efficacy.
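One simple way a feedback loop can decide when to retrain is to compare recent production data against the data the model was trained on. The sketch below flags retraining when the mean of a feature drifts too far from its reference mean. The function `needs_retraining`, the `max_shift` threshold, and the sample values are hypothetical, and real systems often use more robust drift tests.

```python
from statistics import mean, stdev


def needs_retraining(reference: list[float], recent: list[float],
                     max_shift: float = 2.0) -> bool:
    """Flag retraining when the recent mean lies more than `max_shift`
    reference standard deviations away from the reference mean."""
    ref_std = stdev(reference)
    if ref_std == 0:
        # A constant reference feature: any change in the mean counts as drift.
        return mean(recent) != mean(reference)
    shift = abs(mean(recent) - mean(reference)) / ref_std
    return shift > max_shift


# Hypothetical feature values seen at training time and in production.
training_values = [10.0, 11.0, 9.0, 10.0, 10.0]
stable_batch = [10.0, 10.5, 9.5]
drifted_batch = [14.0, 15.0, 13.0]

print(needs_retraining(training_values, stable_batch))   # False
print(needs_retraining(training_values, drifted_batch))  # True
```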

Scalability and Flexibility in Model Deployment:

ML Ops leverages automation and containerization technologies to deploy models efficiently across different environments, such as on-premises or cloud infrastructure, and to adapt quickly to changing business needs.

Model Ops Vs ML Ops

| ACTIVITY | MODEL OPS | ML OPS |
| --- | --- | --- |
| Data Preparation | Collaborates with ML Ops to ensure that data is clean, well-formatted, and ready for analysis. | Automates infrastructure and tooling for efficient data preparation, such as data wrangling and feature engineering. |
| Model Training | Optimizes model training workflows and resource allocation across a variety of techniques, such as supervised learning, unsupervised learning, and reinforcement learning. | Automates model training steps, such as hyperparameter tuning and model selection, for scalable and reproducible training. |
| Model Evaluation | Assesses the performance of ML models and identifies areas for improvement. | Automates model evaluation steps, such as scoring and calibration. |
| Model Deployment | Manages the deployment of trained models in production environments. | Automates the creation and management of ML pipelines for seamless model deployment. |
| Model Monitoring | Collaborates with ML Ops to track the performance of models in production and sets up monitoring systems to ensure that model performance is maintained. | Automates monitoring systems and processes, such as anomaly detection and root cause analysis, to track model performance. |
| Model Governance | Establishes governance policies and procedures for model management to ensure that ML models are used in a responsible and ethical manner. | Ensures that ML models are used in a responsible and ethical manner. |

Table 1. Relationship between Model Ops and ML Ops
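To make the Model Governance row more concrete, the sketch below shows how governance policies could be expressed as an automated check that runs before a model is deployed. The `ModelRecord` fields and the three rules are hypothetical examples chosen for illustration, not a standard policy set.

```python
from dataclasses import dataclass, field


@dataclass
class ModelRecord:
    """Minimal metadata a governance process might require for each model."""
    name: str
    owner: str = ""
    approved_by: str = ""
    documented_risks: list[str] = field(default_factory=list)


def governance_violations(record: ModelRecord) -> list[str]:
    """Return the policy rules this record fails; an empty list means it may be deployed."""
    violations = []
    if not record.owner:
        violations.append("missing owner")
    if not record.approved_by:
        violations.append("missing approval")
    elif record.approved_by == record.owner:
        violations.append("owner cannot self-approve")
    if not record.documented_risks:
        violations.append("no risk assessment documented")
    return violations


# Hypothetical records: one self-approved without a risk assessment, one compliant.
draft = ModelRecord(name="churn-model", owner="alice", approved_by="alice")
ready = ModelRecord(name="churn-model", owner="alice", approved_by="bob",
                    documented_risks=["regional sampling bias"])

print(governance_violations(draft))  # ['owner cannot self-approve', 'no risk assessment documented']
print(governance_violations(ready))  # []
```

Encoding the rules as code lets the same policy be applied uniformly to every model, which is where the governance role of Model Ops and the automation role of ML Ops meet.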

When to opt for Which?

When it comes to managing and operationalizing machine learning (ML) models, each approach has its own strengths and considerations, depending on the specific requirements and context of the ML projects.

Factors to Consider when Opting for Model Ops

- Size and complexity of AI initiatives: If the organization primarily focuses on deploying and managing a small number of ML models, Model Ops can provide a streamlined approach to ensure that its models are developed, deployed, and maintained in a consistent and efficient manner.

- Governance and compliance: For organizations preferring a lean and agile approach, Model Ops provides governance and compliance rules that focus on the operational aspects of individual models, allowing teams to iterate, deploy, and monitor models quickly with minimal overhead.

- Collaboration between teams: Model Ops facilitates collaboration between data scientists and IT professionals, breaking down the silos between them so that everyone works towards the same goals.

Factors to Consider when Opting for ML Ops

- Size and complexity of ML Initiatives: ML Ops is well-suited for large-scale ML initiatives that involve multiple models and complex workflows. It provides a comprehensive framework to manage the end-to-end ML lifecycle, from data preparation to model deployment, monitoring, and governance, ensuring scalability and consistency.

- Collaboration and Reproducibility: ML Ops emphasizes collaboration and reproducibility. For teams that need to work together efficiently, it provides the tools and practices to foster collaboration, version control, and documentation, ensuring transparency and reproducibility across the ML workflow.

- Scalability and Automation: If the organization plans to deploy and manage a large number of models across different environments and data sources, ML Ops can provide the necessary infrastructure, automation, and scalability to handle the complexity.