Use Responsible AI to advance your company and gain a competitive advantage.

Organizations are increasingly using Artificial Intelligence (AI) to create value and raise productivity, and AI-driven decisions now affect our daily lives on a large scale.

AI is expected to add $15.7 trillion to global gross domestic product by 2030.

Despite this seemingly limitless potential, AI also produces unexpected outcomes such as bias and discrimination, and it raises concerns about fairness and compliance. This has increased the pressure on organizations and governments, which are held accountable for answering consumers’ questions about AI compliance, governance, trust, and data security and privacy.

As a result, organizations have started to scale their AI with a more practical and accountable approach. By implementing Responsible AI (RAI) principles, organizations can ensure that they use AI in an ethical and responsible manner.

This is where Responsible AI comes into the picture. So what is RAI, what are its principles, and how can you implement them in your organization?

What is Responsible AI?

Organizations must ensure that their AI models are reliable, trustworthy, and safe for public use. Responsible AI (RAI) is a standard for ensuring that models are safe, robust, explainable, interpretable, and ethical. To achieve this, organizations build a framework of pre-defined principles, ethics, and governance rules around their AI models, starting from the design phase.

For example, GPT-3, one of the largest neural language models at the time of its release, was reported to respond with insulting comments and biased statements, with no accountability for that bias.

This example illustrates that as AI technology evolves rapidly, organizations carry a greater responsibility to make sure their models are reliable and safe for public use, and to answer for the outputs those models produce.

Business Value of Responsible AI

AI systems become more rational and trustworthy as a result of the practices that responsible AI integrates into them, which makes the technology safer and more reliable. This gives business owners and SaaS vendors the confidence to present their business models to end users and persuade them of their value. Initiatives must be taken at the early stages of AI adoption to protect the business from risk, safeguard its reputation, and minimize losses. The responsible AI framework differs for each project, and care should be taken to customize it to that project's needs.

The system must be explainable in both its functional and technical aspects. Algorithmic fairness is the most challenging area for businesses to focus on. Many tools and methods are available that help identify fairness risks and resolve them through root-cause analysis, which makes the algorithm more technically robust.
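As a rough illustration of what "identifying a fairness risk" can look like in practice, the sketch below computes two commonly used group-fairness measures, the demographic parity difference and the disparate impact ratio, from binary predictions. The function names and the sample data are hypothetical and are not taken from any particular toolkit; real projects would normally rely on an established library.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive predictions (value 1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rate (0 = parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(predictions, groups):
    """Lowest selection rate divided by the highest (1 = parity)."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical model outputs: 1 = approved, 0 = rejected.
sample_predictions = [1, 0, 1, 1, 0, 1, 0, 0]
sample_groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

if __name__ == "__main__":
    print(selection_rates(sample_predictions, sample_groups))
    print(demographic_parity_difference(sample_predictions, sample_groups))
    print(disparate_impact_ratio(sample_predictions, sample_groups))
```

A large gap between groups does not by itself prove the model is unfair, but it is a useful trigger for the root-cause analysis described above.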

In “black-box” algorithms, accuracy generally improves as the model learns from more training data, but the transparency of the model decreases. This can be addressed to an extent by diversifying the training data and the hyperparameters used. There also has to be a balance between designing a responsible AI framework and actually implementing it. How that balance is struck depends on the business group of the organization.

Implementing Responsible AI

To mitigate risk and govern models with greater security, it is important to analyze and implement RAI practices. Companies such as Microsoft and Google have developed their own RAI frameworks, while the European Commission has published requirements for trustworthy AI that makes sound decisions based on its predicted outcomes. By building a model and an RAI framework that abide by these principles, an organization can identify potential threats and eliminate them.

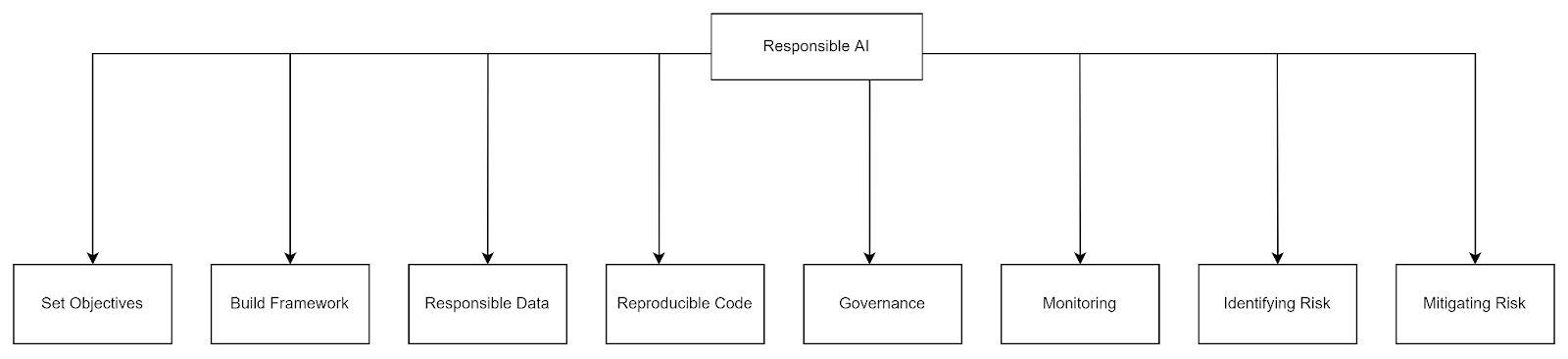

Figure 1. Steps involved in creating a responsible AI system

Set a clear objective that defines the business scope, along with checkpoints that keep the working model aligned with the design. Understand how much interpretability and explainability your model needs.

Alongside the model, build a framework that documents all relevant information in detail so that the model can be understood. Various tools on the market serve this purpose.
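One lightweight way to approach this documentation step is to keep a structured record next to each model version. The sketch below is only an assumption about what such a record might contain; the `ModelRecord` class, its fields, and the example values are hypothetical and should be adapted to your own governance requirements.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelRecord:
    """Hypothetical documentation record stored alongside a trained model."""

    name: str
    version: str
    objective: str
    training_data: str
    known_limitations: list = field(default_factory=list)
    owners: list = field(default_factory=list)

    def to_json(self):
        """Serialize the record so it can be versioned with the model."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Illustrative values only.
record = ModelRecord(
    name="loan-approval",
    version="0.1.0",
    objective="Rank loan applications for manual review",
    training_data="Internal applications, 2019 to 2022 (hypothetical)",
    known_limitations=["Not validated for applicants under 21"],
    owners=["risk-team"],
)

if __name__ == "__main__":
    print(record.to_json())
```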

Identify the types of risk that can affect the model, create rules that monitor and eliminate them at the entry level, and leave scope for root-cause analysis that helps mitigate them.
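The idea of rules applied "at the entry level" can be sketched as a set of named checks that every input record passes through before it reaches the model. Everything here is illustrative: the `RiskRule` class, the rule names, and the thresholds are assumptions, not part of any specific RAI framework. Returning the names of the violated rules, rather than a plain pass or fail, keeps enough detail for later root-cause analysis.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class RiskRule:
    """A named check; `check` returns True when the record passes."""

    name: str
    check: Callable[[dict], bool]


# Hypothetical rules for a hypothetical loan-scoring model.
RULES = [
    RiskRule(
        "age_in_range",
        lambda r: isinstance(r.get("age"), (int, float)) and 18 <= r["age"] <= 100,
    ),
    RiskRule("income_present", lambda r: r.get("income") is not None),
    RiskRule("no_protected_attribute", lambda r: "ethnicity" not in r),
]


def screen(record, rules=RULES):
    """Return the names of violated rules; an empty list means the record may proceed."""
    return [rule.name for rule in rules if not rule.check(record)]


if __name__ == "__main__":
    print(screen({"age": 30, "income": 50000}))
    print(screen({"age": 15, "ethnicity": "x"}))
```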

Try to create components that are reproducible and can be reused to build robust models irrespective of the input structure and parameters provided to the model. Reproducibility is one of the key factors in creating responsible AI.
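Two small habits go a long way toward reproducibility: seeding every source of randomness, and recording exactly which configuration produced a given model. The sketch below shows both using only the Python standard library. The helper names `config_fingerprint` and `split_indices` are hypothetical, and a real pipeline would also need to seed whichever ML framework it uses.

```python
import hashlib
import json
import random


def config_fingerprint(config):
    """Return a short, stable hash of a configuration dictionary."""
    canonical = json.dumps(config, sort_keys=True)  # key order must not matter
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def split_indices(n_rows, test_fraction, seed):
    """Shuffle row indices with a fixed seed and split them into train and test."""
    rng = random.Random(seed)  # local generator, so global state is untouched
    indices = list(range(n_rows))
    rng.shuffle(indices)
    n_test = round(n_rows * test_fraction)
    cut = n_rows - n_test
    return indices[:cut], indices[cut:]


if __name__ == "__main__":
    config = {"learning_rate": 0.01, "seed": 42, "test_fraction": 0.2}
    train, test = split_indices(10, config["test_fraction"], config["seed"])
    print(config_fingerprint(config), len(train), len(test))
```

Storing the fingerprint with the trained model makes it possible to check later whether two runs really used the same settings.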

Responsible AI Tool Kits

Organizations face unique challenges across the ML life cycle and must create an RAI framework that suits their specific needs. This framework must document the roles and responsibilities of teams and promote transparency. Some of the widely used tools are:

- TensorFlow Federated

TensorFlow Federated (TFF) is an open-source framework for machine learning and other computations on decentralized data, such as federated analytics. Developers can experiment with new algorithms, or test existing algorithms on their own models and data, using its multi-machine simulation runtime. This makes it a useful tool for conducting experiments and gaining insight into your machine learning models.

- AI Fairness 360

This open-source library offers both fairness metrics and bias mitigation algorithms that can help organizations understand and examine risks, bias, and discrimination and mitigate them throughout the ML life cycle. Leveraging these tools, organizations can ensure their ML models are fair, equitable, and ethical.

- Responsible AI Toolbox

Microsoft’s RAI Toolbox is an open-source framework designed to facilitate the development, deployment, and assessment of machine learning projects. It provides best-in-class tools for error analysis, interpretability, and counterfactual analysis, empowering organizations to develop ML solutions confidently.

- eXplainability ToolBox

XAI is an open-source framework created by the Institute for Ethical AI & Machine Learning based on their eight principles of RAI. This tool helps in performing bias evaluation, identifying disparity among the ML models, and monitoring them using the “tools and process” approach.

- DALEX

DALEX (moDel Agnostic Language for Exploration and eXplanation) is a package from DrWhy.AI, a collection of tools for explainable AI (XAI). It helps explain the behavior of any model and how it works. Models can be explored and compared with other models in the library.

Conclusion

Building an RAI framework is essential for any organization that wants to create a robust, fair, secure, and trustworthy AI system. Alongside the government regulations already in place, organizations must devise their own practices, which can also have a positive impact on the business. Establishing a reputation and brand name that is synonymous with responsible AI can give organizations a competitive edge.

Though principles and toolkits are available to help build an RAI framework, organizations must take proactive steps to ensure they are put into practice from the beginning of their ML journey. This supports long-term success and the best possible results from their Responsible AI model monitoring and management.
