Drift is one of the main factors affecting model performance.

Business decision-making is increasingly dependent on ML models. These models are trained on historical datasets, called training or source data, on the fundamental presumption that “data patterns repeat themselves”. But the business environment constantly evolves, and real-world data drifts away from the training data.

As a result, the accuracy of deployed ML models tends to decrease over time. Drift in AI/ML models has grown into a significant problem as more and more machine learning models are deployed and used in live environments for practical applications.

In this blog, let us understand what drift is, what causes it in our ML models, and how to identify and overcome it.

What is Drift

Machine Learning (ML) uses predictive modeling to produce a mapping function (f) that can accurately forecast output values (y) from input data (x).

y = f(x)

The function is fitted statistically on a training dataset and then used to make predictions about future data. However, changes in the environment, in the target variables, or in the relationships between parameters, as well as unexpected events such as the COVID-19 pandemic, can all lead to a phenomenon known as Model Drift. This is where the model's predictions are no longer accurate because of new input variables or data.

Figure 1 shows the model performance drift

Causes of Drift

Drift in machine learning can be a major cause of decreased model performance and can happen for a variety of reasons. However, most causes of drift generally fall into two main categories: bad training data and changing environments.

Bad training data can come from a variety of sources, including incorrect data, incorrect processing steps, duplicate data, and missing data. This can lead to inaccurate predictions and a decrease in model performance. Additionally, sampling mismatches, such as sample selection bias or non-stationary environments, can cause data to drift as well. For example, a model that predicts winter fashion trends in tropical countries cannot rely only on training data from cold countries.

Changing environments can also affect model performance. Unexpected external events can mix up data, causing scope changes that are out of our control. Additionally, changes to feature values can alter the meaning and value of data. For example, a visa immigration model deployed five years ago will not have a “COVID vaccination” category in its checklist.

Overall, it is important to be aware of the various causes of drift in machine learning, as it can lead to decreased model performance. Knowing how to identify and address these issues is key to creating effective and accurate models.
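One way to identify that a feature has moved away from its training distribution is to compare the two samples directly. The sketch below is only an illustration, not a method taken from this blog: it computes a Population Stability Index (PSI) between a reference sample and a newer one using the Python standard library. The function name `psi`, the bin count, and the synthetic Gaussian data are all assumptions made for the example.

```python
import math
import random

def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a new sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the reference is constant

    def fractions(values):
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the reference range land in the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each fraction at eps to avoid log(0) and division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Synthetic data (assumption): training feature vs. two "live" samples.
rng = random.Random(0)
train = [rng.gauss(0.0, 1.0) for _ in range(5000)]
live_same = [rng.gauss(0.0, 1.0) for _ in range(5000)]      # same distribution
live_shifted = [rng.gauss(1.0, 1.0) for _ in range(5000)]   # mean moved by 1

psi_same = psi(train, live_same)
psi_shifted = psi(train, live_shifted)
print(f"PSI, no shift: {psi_same:.3f}")
print(f"PSI, shifted:  {psi_shifted:.3f}")
```

A commonly quoted rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, but these cut-offs are conventions rather than guarantees and should be tuned per feature.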

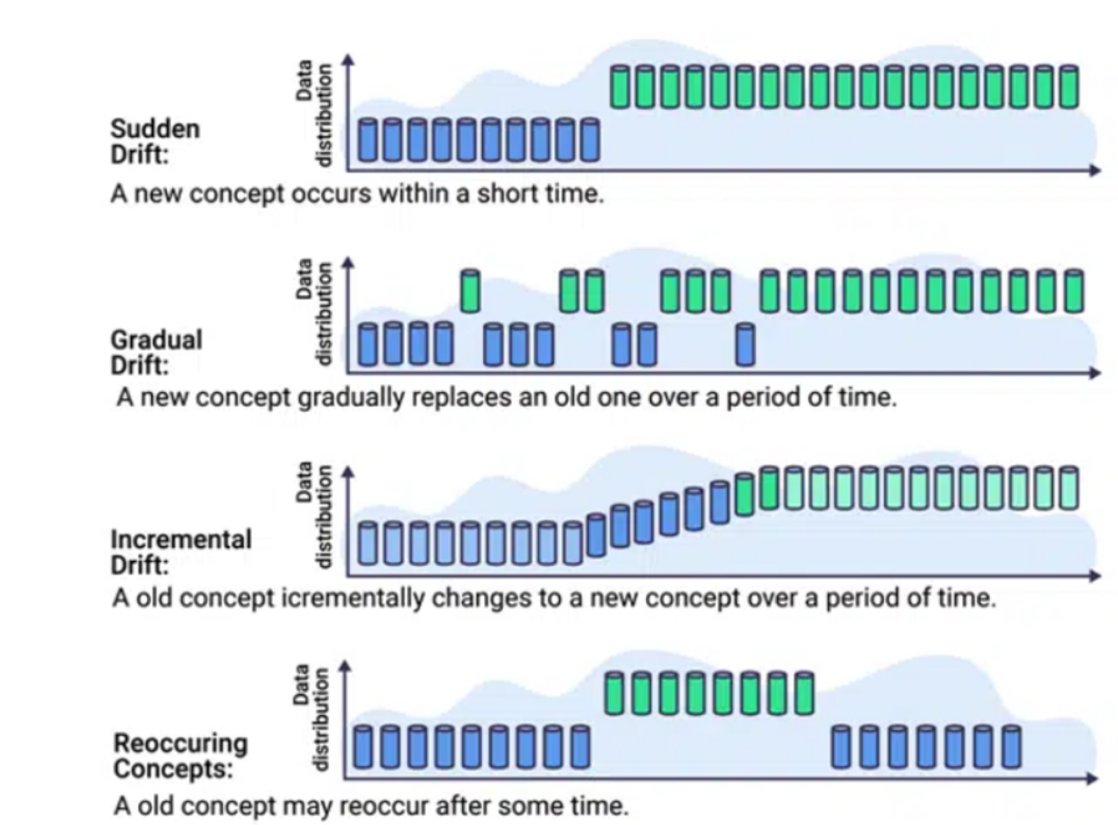

Figure 2 shows some types of concept drift, distinguished by how quickly the transition occurs

Ways to overcome Drift

Detecting model drift is the first step. Once the type of drift and its frequency are identified, the next step is to overcome the drift and increase the performance of the model. Some of the commonly used approaches are:

1. Periodic Retraining

The timescale of retraining will depend on the complexity of the model and how often the underlying data changes. Ultimately, the frequency of retraining should be determined based on the performance of the model. If the model’s performance is degrading over time, then the model should be retrained more frequently.
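A performance-based retraining rule like the one described above could be sketched as follows. This is a minimal illustration under stated assumptions: the class name `RetrainTrigger`, the baseline accuracy, the tolerance, and the window size are all hypothetical, and it assumes true labels eventually become available for live predictions.

```python
from collections import deque

class RetrainTrigger:
    """Flag retraining when rolling accuracy falls too far below a baseline."""

    def __init__(self, baseline_accuracy, tolerance=0.05, window=100):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # keeps only the most recent results

    def record(self, prediction, label):
        self.outcomes.append(prediction == label)

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def should_retrain(self):
        # Wait for a full window so a few early mistakes do not trigger retraining.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance

trigger = RetrainTrigger(baseline_accuracy=0.90, tolerance=0.05, window=10)

for _ in range(10):
    trigger.record(prediction=1, label=1)   # ten correct predictions
stable = trigger.should_retrain()

for _ in range(5):
    trigger.record(prediction=1, label=0)   # five recent mistakes
degraded = trigger.should_retrain()

print(stable, degraded, trigger.rolling_accuracy())
```

In practice the window and tolerance trade off reaction speed against false alarms, so they are worth tuning against how noisy the model's day-to-day accuracy is.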

2. Regularization

Regularization is a technique used to reduce the complexity of a model by adding constraints to the model weights. This reduces the influence of less important features and helps prevent the model from overfitting to quirks of the original data, which can make it less brittle when the data shifts.
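To make the idea of constraining weights concrete, here is a minimal sketch of L2 (ridge) regularization for a one-feature linear model without an intercept. The function name `ridge_weight`, the toy data, and the penalty values are assumptions chosen for illustration; real models would normally rely on a library implementation.

```python
def ridge_weight(xs, ys, lam):
    """Weight w for y ≈ w * x that minimises sum((y - w*x)^2) + lam * w^2.

    Setting the derivative to zero gives w = sum(x*y) / (sum(x*x) + lam).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]   # exactly y = 2x

w_plain = ridge_weight(xs, ys, lam=0.0)    # ordinary least squares
w_ridge = ridge_weight(xs, ys, lam=10.0)   # penalty pulls the weight toward zero

print(w_plain, w_ridge)
```

The penalty shrinks the weight from 2.0 to 1.5 here; the same mechanism, applied across many features, is what limits the influence of the less important ones.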

3. Ensemble Approach

This method aims to improve overall performance by combining the decisions of multiple models, which can also help in detecting domain drift. Both simple and more advanced ensemble techniques are available, and the choice depends on the approach required and the context in which the shift occurs.
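As one possible illustration of an ensemble that adapts when the data shifts, the sketch below uses a weighted majority vote in which members that make mistakes lose voting weight. This is a swapped-in example technique rather than one named in this blog, and the class `WeightedMajorityEnsemble`, the penalty factor `beta`, and the three toy rules are all hypothetical.

```python
from collections import defaultdict

class WeightedMajorityEnsemble:
    """Combine classifiers by weighted vote; shrink the weight of members that err."""

    def __init__(self, models, beta=0.5):
        self.models = list(models)
        self.beta = beta                          # penalty factor in (0, 1)
        self.weights = [1.0] * len(self.models)

    def predict(self, x):
        votes = defaultdict(float)
        for model, weight in zip(self.models, self.weights):
            votes[model(x)] += weight
        return max(votes, key=votes.get)

    def update(self, x, label):
        # Once the true label is known, penalise every member that got it wrong.
        for i, model in enumerate(self.models):
            if model(x) != label:
                self.weights[i] *= self.beta

# Toy members (assumption): two reflect the old concept, one the new concept.
def old_rule_a(x):
    return int(x > 0)

def old_rule_b(x):
    return int(x > 1)

def new_rule(x):
    return int(x > 5)

ensemble = WeightedMajorityEnsemble([old_rule_a, old_rule_b, new_rule])

before = ensemble.predict(3)      # the two old rules outvote the new one
for _ in range(3):
    ensemble.update(3, 0)         # after the drift, the true label for x=3 is 0
after = ensemble.predict(3)       # the new rule now carries the vote

print(before, after, ensemble.weights)
```

A sharp fall in the weights of previously reliable members is itself a signal that the underlying concept may have changed.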

4. Cross-validation

This technique evaluates a model’s performance by splitting the dataset into multiple subsets, training the model on all but one subset, and testing it on the held-out subset, repeating until every subset has been held out once. This helps to check that the model is robust against changes in the underlying data distribution and can lead to more accurate predictions.
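A plain k-fold procedure can be sketched as below: each fold is held out once for testing while the model is trained on the remaining folds. The helper names `k_fold_indices` and `cross_validate`, the mean-predictor "model", and the toy data are assumptions used only to keep the example self-contained.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for k contiguous folds."""
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train, test
        start += size

def cross_validate(xs, ys, k, fit, score):
    """Train on k-1 folds, score on the held-out fold, and collect the scores."""
    scores = []
    for train, test in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        scores.append(score(model, [xs[i] for i in test], [ys[i] for i in test]))
    return scores

# Toy "model" (assumption): always predict the mean of the training targets.
def fit_mean(xs, ys):
    return sum(ys) / len(ys)

def mean_abs_error(model, xs, ys):
    return sum(abs(y - model) for y in ys) / len(ys)

xs = [0, 1, 2, 3, 4, 5]
ys = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]   # targets with a steady upward trend

scores = cross_validate(xs, ys, k=3, fit=fit_mean, score=mean_abs_error)
print(scores)   # [3.0, 0.5, 3.0]
```

The large spread between folds shows that this toy model depends heavily on which slice of the data it sees, which is the kind of fragility cross-validation is meant to expose.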

Conclusion

Drift is a critical issue for data scientists and ML engineers that can affect the performance of models and their credibility. To overcome drift, teams should understand the patterns, divergence rate, and context of drift and apply the appropriate techniques to retrain the model and eliminate drift.

With the right tools and platform solutions, drift can be managed effectively. With dataset monitors and integrated model monitoring, teams can monitor model performance, detect drift, and set up auto-retraining of models to keep them up-to-date and accurate.